I think a substantial part of the problem is the employee turnover rates in the industry. It seems to be just accepted that everyone is going to jump to another company every couple years (usually due to companies not giving adequate raises). This leads to a situation where, consciously or subconsciously, noone really gives a shit about the product. Everyone does their job (and only their job, not a hint of anything extra), but they’re not going to take on major long term projects, because they’re already one foot out the door, looking for the next job. Shitty middle management of course drastically exacerbates the issue.

I think that’s why there’s a lot of open source software that’s better than the corporate stuff. Half the time it’s just one person working on it, but they actually give a shit.

Definitely part of it. The other part is soooo many companies hire shit idiots out of college. Sure, they have a degree, but they’ve barely understood the concept of deep logic for four years in many cases, and virtually zero experience with ANY major framework or library.

Then, dumb management puts them on tasks they’re not qualified for, add on that Agile development means “don’t solve any problem you don’t have to” for some fools, and… the result is the entire industry becomes full of functionally idiots.

It’s the same problem with late-stage capitalism… Executives focus on money over longevity and the economy becomes way more tumultuous. The industry focuses way too hard on “move fast and break things” than making quality, and … here we are, discussing how the industry has become shit.

Shit idiots with enthusiasm could be trained, mentored, molded into assets for the company, by the company.

Ala an apprenticeship structure or something similar, like how you need X years before you’re a journeyman at many hands on trades.

But uh, nope, C suite could order something like that be implemented at any time.

They don’t though.

Because that would make next quarter projections not look as good.

And because that would require actual leadership.

This used to be how things largely worked in the software industry.

But, as with many other industries, now finance runs everything, and they’re trapped in a system of their own making… but its not really trapped, because… they’ll still get a golden parachute no matter what happens, everyone else suffers, so that’s fine.

Exactly. I don’t know why I’m being downvoted for describing the thing we all agree happens…

I don’t blame the students for not being seasoned professionals. I clearly blame the executives that constantly replace seasoned engineers with fresh hires they don’t have to pay as much.

Then everyone surprise pikachu faces when crap is the result… Functionally idiots is absolutely correct for the reality we’re all staring at. I am directly part of this industry, so this is more meant as honest retrospective than baseless namecalling. What happens these days is idiotry.

The article is very much off point.

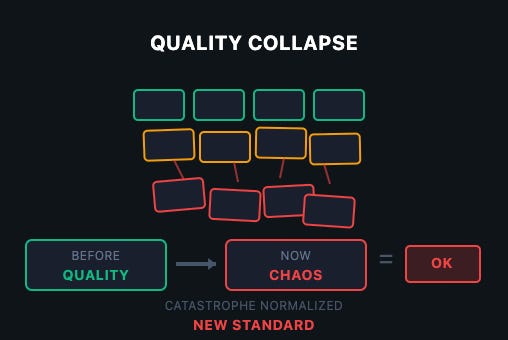

- Software quality wasn’t great in 2018 and then suddenly declined. Software quality has been as shit as legally possible since the dawn of (programming) time.

- The software crisis has never ended. It has only been increasing in severity.

- Ever since we have been trying to squeeze more programming performance out of software developers at the cost of performance.

The main issue is the software crisis: Hardware performance follows moore’s law, developer performance is mostly constant.

If the memory of your computer is counted in bytes without a SI-prefix and your CPU has maybe a dozen or two instructions, then it’s possible for a single human being to comprehend everything the computer is doing and to program it very close to optimally.

The same is not possible if your computer has subsystems upon subsystems and even the keyboard controller has more power and complexity than the whole apollo programs combined.

So to program exponentially more complex systems we would need exponentially more software developer budget. But since it’s really hard to scale software developers exponentially, we’ve been trying to use abstraction layers to hide complexity, to share and re-use work (no need for everyone to re-invent the templating engine) and to have clear boundries that allow for better cooperation.

That was the case way before electron already. Compiled languages started the trend, languages like Java or C# deepened it, and using modern middleware and frameworks just increased it.

OOP complains about the chain “React → Electron → Chromium → Docker → Kubernetes → VM → managed DB → API gateways”. But he doesn’t even consider that even if you run “straight on bare metal” there’s a whole stack of abstractions in between your code and the execution. Every major component inside a PC nowadays runs its own separate dedicated OS that neither the end user nor the developer of ordinary software ever sees.

But the main issue always reverts back to the software crisis. If we had infinite developer resources we could write optimal software. But we don’t so we can’t and thus we put in abstraction layers to improve ease of use for the developers, because otherwise we would never ship anything.

If you want to complain, complain to the mangers who don’t allocate enough resources and to the investors who don’t want to dump millions into the development of simple programs. And to the customers who aren’t ok with simple things but who want modern cutting edge everything in their programs.

In the end it’s sadly really the case: Memory and performance gets cheaper in an exponential fashion, while developers are still mere humans and their performance stays largely constant.

So which of these two values SHOULD we optimize for?

The real problem in regards to software quality is not abstraction layers but “business agile” (as in “business doesn’t need to make any long term plans but can cancel or change anything at any time”) and lack of QA budget.

Accept that quality matters more than velocity. Ship slower, ship working. The cost of fixing production disasters dwarfs the cost of proper development.

This has been a struggle my entire career. Sometimes, the company listens. Sometimes they don’t. It’s a worthwhile fight but it is a systemic problem caused by management and short-term profit-seeking over healthy business growth

The sad thing is that velocity pays the bills. Quality it seems, doesn’t matter a shit, and when it does, you can just patch up the bits people noticed.

This is survivorship bias. There’s probably uncountable shitty software that never got adopted. Hell, the E.T. video game was famous for it.

Removed by mod